Take a look at the list of exhibitors and sponsors of Syndicate, going on right now in lovely San Francisco. How many web analytics vendors do you see on the list? None? Any bets on what that list might look like at next year's conference?

There are many companies there who are building analytics solutions. Take a look at some of the approaches these folks are taking. Very creative ideas indeed.

Thursday, December 15, 2005

Tuesday, December 13, 2005

Web Analytics Data Changing User Behavior?

Many of us are familiar with providing web analytics data via an overlay. I believe Fireclick was first to this party with their Site Explorer tool...WebTrends has a product called SmartView...other vendors have their own versions as well. This rich analytics data has historically been viewed only by those using these analytics tools.

Whether or not you like the term Web 2.0, many of the new implementations of the web-as-a-platform are changing how we think of analytics. Ajax is changing how we think of tracking visitors, visits and views. APIs are opening up everywhere, providing a healthy exchange of data. Del.icio.us is my favorite example of a disruptive tool, as it changes our paradigms of saving, sharing and searching.

So, how about web analytics? What's new and interesting and disruptive in our world? Here's one approach that I like. Mybloglog.com, previously noted here, looks to have an API that shows a lot of promise. I can't find any information on their site about it, but you can see an example of what they're doing at this cool Del.icio.us tool site (hover over the links in the article).

Wow. Are visitors more likely to click on the links that are rated higher, thus changing visitor behavior? Perhaps so. Amazon has done this for years. Others do as well, but I've not seen someone directly using web analytics data to do this before. Are there other examples out there?
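Mechanically, this kind of display boils down to ranking links by how often they're clicked and showing that next to each link. I couldn't find documentation for MyBlogLog's API, so this is not how they do it. It's a minimal Python sketch, assuming you can export one URL per recorded outbound click from your analytics tool, that builds the kind of label a hover tooltip might show.

```python
from collections import Counter


def link_overlay_labels(clicks: list[str]) -> dict[str, str]:
    """Rank links by click share and build a hover-style label for each.

    `clicks` is a hypothetical analytics export: one URL per recorded
    click on a link in the article.
    """
    counts = Counter(clicks)
    total = sum(counts.values())
    labels = {}
    # most_common() yields links from most to least clicked, so rank 1 is the top link.
    for rank, (url, n) in enumerate(counts.most_common(), start=1):
        noun = "click" if n == 1 else "clicks"
        labels[url] = f"#{rank}: {n} {noun} ({100 * n / total:.0f}% of all clicks)"
    return labels
```

For clicks of `["/a", "/b", "/a", "/c", "/a", "/b"]`, the link `/a` gets the label `#1: 3 clicks (50% of all clicks)`.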

Filed in: analytics technology

Monday, December 12, 2005

Web Analytics and Feeds #1: Feedburner

First in a series on analyzing feeds...

Feedburner is an excellent service for bloggers. They provide some terrific tools to easily expand the presence of a blog. They have methods to help publicize, optimize, monetize, analyze and yes, even troubleshootize (honest!) your feed.

Analysis Tools

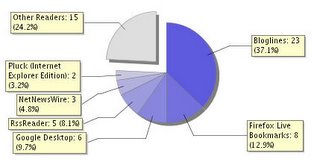

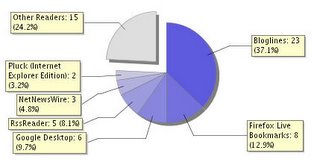

Their free analysis tools are fairly simple, but quite useful. Feed Circulation gives me an aggregate number of subscribers on a daily basis. Since they provide this stat through their Awareness API too, I have a widget on my desktop that gives me my updated number each day.

They also provide a further breakdown of my Readership, showing the number of subscribers by reader. Note: now that 23 of you subscribe via Bloglines, according to the nice folks over at Ask Jeeves...this is a blog that matters (although they've probably upped the requirement since October's Web 2.0 conference :-)

Collecting Feed Subscribers

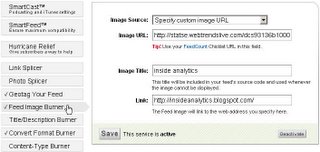

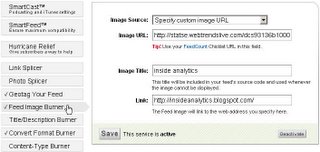

Getting this data from Feedburner is very handy, but I wanted to tie my Feedburner subscribers back to my other analysis tools. There are a couple of ways to do this, but the simplest method is to add an image request into Feedburner's "Feed Image Burner", as shown in the screenshots above.

The Feed Image Burner is normally used to provide an image to readers when displaying articles, but I hijacked this image location to add an analytics image request. Geek note: in RSS 2.0, this image lives in channel->image->url; in Atom, I believe the atom:logo element would work the same way. To include requests for my feed in my web analytics data, I modified the "noscript" image request from my WebTrends code and inserted it into the "Image URL" field. The image request looks like this:

http://statse.webtrendslive.com/dcs93136b10000004rrmeb3jo_5u5i/njs.gif?dcsuri=/Feedburner.feed&WT.js=No&WT.ti=FeedBurnerFeed

Cool. This gives me a separate breakdown in my WebTrends reports showing requests to the URI "Feedburner.feed". The image is requested when someone actually reads the feed, not when their feed reader merely fetches it. (Note: I'll figure out an equivalent URL for MeasureMap and Google Analytics, and post that in case anyone is interested.)
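If you want to generate similar requests for other pseudo-pages (one per feed, say), the URL can be assembled in code rather than by hand. A minimal Python sketch, assuming the three query parameters in my request are all you need; the account-specific path segment is copied from my setup and will differ for yours, and the function name is just for illustration.

```python
from urllib.parse import urlencode

# Collection endpoint and account ID copied from the request above; yours will differ.
BASE_URL = "http://statse.webtrendslive.com/dcs93136b10000004rrmeb3jo_5u5i/njs.gif"


def feed_image_request(uri: str, title: str) -> str:
    """Build a noscript-style image request that records a feed view as a page view."""
    params = {
        "dcsuri": uri,   # pseudo-page the hit is reported under
        "WT.js": "No",   # same value as the noscript tag: hit not sent via JavaScript
        "WT.ti": title,  # title shown for the pseudo-page in reports
    }
    # safe="/" leaves the slash in dcsuri unencoded, matching the hand-written URL.
    return BASE_URL + "?" + urlencode(params, safe="/")
```

Calling `feed_image_request("/Feedburner.feed", "FeedBurnerFeed")` reproduces the request above exactly; titles with spaces come out encoded as `+`.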

Feedburner Issue

Back to Feedburner. I noticed an issue that I need to research further. I was originally using their "SmartFeed" service to translate the feed format to be compatible with the subscriber's application. SmartFeed seemed to strip out the image, even though the image appeared correctly in the "XML Source" view in the Feedburner UI. I disabled the SmartFeed service, set the "Convert Format Burner" to RSS 2.0, and everything worked great. I can now analyze when the feeds are viewed in a reader (for those readers that display an image, of course).

More complications

There's more to this story. I now have some visibility into (at least a partial list of) who is reading my feeds. But, are they visiting the site? More on this conversion in a subsequent post...

Filed in: analytics feedburner rss

Friday, December 09, 2005

Google Transit - WA Wednesday

Another great idea from the "20% time" policy Google offers its engineers. This one pulls together transit schedules and Google Maps in a very slick mashup.

They chose Portland for the first launch. Those of us who live in Portland know the Tri-Met transit system is excellent. Google puts it this way in their blog:

We chose to launch with the Portland metro area for a couple of reasons. Tri-Met, Portland's transit authority, is a technological leader in public transportation. The team at Tri-Met is a group of tremendously passionate people dedicated to serving their community. And Tri-Met has a wealth of data readily available that they were eager to share with us for this project. This combination of great people and great data made Tri-Met the ideal partner.

Fantastic stuff. Oh, and if you're downtown next Wednesday evening and need to get to the Lucky Labrador for the Web Analytics Wednesday chat, this link might help.

Or, if you're out near Intel in Hillsboro today and want to get downtown to see the national champion Portland Pilots women's soccer team celebration at noon, try this.

Tuesday, December 06, 2005

Kanoodle Has It Backwards

ClickZ reports that Kanoodle is going to pay publishers to distribute their "targeting" cookies. Kanoodle's BrightAds program says:

"Add our code to your page, and we'll drop cookies, so when a user visits another site in the Kanoodle network, we can show them ads that will appeal to them."

I'll give them an A for creativity, and for trying to amass an internet-visitor database as only Google can do right now (with Yahoo close behind).

However, this program is doomed for a couple of reasons. The most obvious reason is that third party cookies are dying a quick death. My own prediction is that third party cookies will be useless by the end of 2006. Since this program relies on third party cookies, their data will be useless.

The second reason this effort will fail is because they (and others) won't be able to "own" visitor data...visitors will. AttentionTrust efforts will put preference data in the hands of visitors, which will fundamentally alter the advertising approach taken by these companies. 2006 will be an interesting time for Attention...

Monday, December 05, 2005

Privacy Policy for Inside Analytics

Your privacy is important to me. I do collect visitor information using a few different services, and experiment occasionally with various tools. However, I will always adhere to the following privacy standards:

Note 1: The information below is equivalent to the following compact P3P policy (which I cannot set in the HTTP header using Blogger, but I do include this information in a meta tag in my template):

NON DSP COR ADM DEV PSA OUR IND UNI COM NAV STA INT

NON: Unfortunately, visitors do not have access to view the information I collect. However I will attempt to share aggregated information about the traffic to this site on a regular basis.

DSP: There may be some disputes about my privacy practices. I don't know of any...but there may be some.

COR: If there are any disputes, I will work to correct them.

ADM: Information collected will help me better administer this site.

DEV: Information collected will help me develop this site.

PSA: Information collected will help me understand (pseudo-analysis) the habits and interests of the visitors to this site, but will not be tied back to any individually identified data.

OUR: The information is ours (mine), and I keep it. It is not now, nor will it ever be, given to any third party.

IND: I plan on keeping the information I collect indefinitely.

UNI: I use unique IDs to identify visitors. This helps me understand visitor preferences over time.

COM: I collect limited information about visitor computer systems, browsers, operating systems, screen resolutions, software, etc., that is provided by the browser, or via javascript.

NAV: I collect navigation information, including pages visited, referrers and offsite links.

STA: I use cookies to maintain visitor identifiers and some limited state information.

INT: I collect search terms used to arrive at this site to better understand visitor traffic and navigation flow.
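If your host does let you set HTTP headers, the compact policy travels as a P3P response header. Here's a minimal Python sketch that builds that header value from the tokens above and expands a compact string back into plain English. The one-line descriptions are my paraphrases of the list above, not the P3P specification's wording, and only this policy's thirteen tokens are included.

```python
# One-line meanings paraphrased from the list above; only this policy's tokens.
P3P_TOKENS = {
    "NON": "visitors cannot access the collected data",
    "DSP": "the policy covers disputes",
    "COR": "disputes will be worked on and corrected",
    "ADM": "used to administer the site",
    "DEV": "used to develop the site",
    "PSA": "pseudo-analysis, not tied to identified individuals",
    "OUR": "kept by the site owner, not given to third parties",
    "IND": "retained indefinitely",
    "UNI": "unique visitor identifiers",
    "COM": "computer, browser and OS information",
    "NAV": "navigation data: pages, referrers, offsite links",
    "STA": "cookies for identifiers and limited state",
    "INT": "search terms used to arrive at the site",
}


def p3p_header_value(tokens: list[str]) -> str:
    """Build the value of a `P3P:` response header from compact-policy tokens."""
    unknown = [t for t in tokens if t not in P3P_TOKENS]
    if unknown:
        raise ValueError(f"unrecognized compact-policy tokens: {unknown}")
    return 'CP="' + " ".join(tokens) + '"'


def explain_policy(policy: str) -> list[str]:
    """Expand a compact policy string like 'NON DSP ...' into readable lines."""
    return [f"{t}: {P3P_TOKENS[t]}" for t in policy.split()]
```

A server would then send the header as `P3P: ` followed by `p3p_header_value("NON DSP COR ADM DEV PSA OUR IND UNI COM NAV STA INT".split())`.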

Note 2: My XML Privacy Report is available at this location: http://eblog.bugbee.org/w3c/p3p.xml. If you visit my "support" site with IE, you can see this file in IE's Privacy Report.

Please email me (elbpdx at gmail.com) if you have any questions about this post or this policy.

Filed in: privacy

Thursday, December 01, 2005

Measuring Visit Duration in Hours

It is definitely possible to go overboard with visitor tracking. Last week I visited one site that sent a tracking hit every second! (I wish I'd saved the URL! Update: it's an applet on Information Week; check out this article on Google Analytics as an example. They're using an On24 Flash applet to deliver News TV, and the On24 applet collects data through Limelight. As irony would have it, On24 uses Google Analytics on its own site.) What could they possibly do with that information? I guess they believe they will know more precisely how long each visit lasted. But once you account for all of the data anomalies generated by visitors who leave their browser open long after the actual visit ends, the data is no longer precise at all.
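For context, here's roughly how visit duration is derived from hit timestamps, as a minimal Python sketch assuming a 30-minute inactivity timeout (a common convention, not any particular vendor's rule). It also shows why per-second hits don't buy precision: as long as an idle, open browser keeps sending hits, no gap ever exceeds the timeout, and the "visit" just keeps growing.

```python
from datetime import datetime, timedelta


def visit_durations(hits: list[datetime],
                    timeout: timedelta = timedelta(minutes=30)) -> list[timedelta]:
    """Split one visitor's hit timestamps into visits and return each visit's duration.

    A new visit starts whenever the gap since the previous hit exceeds `timeout`.
    Duration is last hit minus first hit within each visit.
    """
    ordered = sorted(hits)
    if not ordered:
        return []
    durations = []
    start = prev = ordered[0]
    for t in ordered[1:]:
        if t - prev > timeout:
            # Gap too long: close the current visit and start a new one at t.
            durations.append(prev - start)
            start = t
        prev = t
    durations.append(prev - start)
    return durations
```

Hits at 9:00, 9:05, 9:12, 11:00 and 11:01 come out as two visits of 12 minutes and 1 minute, while a browser sending a hit every minute for three hours counts as a single three-hour visit.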

There are some examples of when visit duration tracking could be very useful (and much more accurate), especially when bundled with a feature. As I type this article, Blogger's auto-save feature is sending a "status" hit every minute or so, which has the dual purpose of saving my draft as I type, and giving Blogger some pretty accurate information about how long folks take to write (and edit!) articles. (Geek sidebar: they use a GET request with the draft text stored in cookies...I wonder what happens when the article is larger than 4k bytes?).
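The 4k question is easy to estimate. A minimal Python sketch, assuming the draft is percent-encoded before it goes into a cookie (raw text can't contain characters like ";") and ignoring cookie attributes, which also count toward the limit; I don't know how Blogger actually encodes or splits its drafts, and the function name is hypothetical.

```python
from urllib.parse import quote

# RFC 6265 asks browsers to support at least 4096 bytes per cookie,
# measured over the cookie's name, value and attributes.
COOKIE_LIMIT = 4096


def draft_fits_in_cookie(name: str, draft: str) -> bool:
    """Rough check: does a percent-encoded draft fit in one name=value cookie?"""
    value = quote(draft, safe="")  # UTF-8 percent-encoding, so "é" becomes "%C3%A9"
    return len(name) + 1 + len(value) <= COOKIE_LIMIT  # +1 for the "="
```

Note how quickly non-ASCII text eats the budget: each "é" costs six bytes, so 700 of them already overflow a single cookie.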

Gmail uses a similar approach. Use the CustomizeGoogle Firefox extension with Google Suggest selected, and every letter you type in the search bar is sent to Google. Wow.

Here's a fun use of technology, brought to you by the advertising geniuses at Virgin Digital. Warning: If you like rock music, trivia, and have a few minutes to spare, be prepared to actually spend a few hours on this, and of course...they're watching how long you spend on the game (we've only found 62 of 74!).

Wednesday, November 30, 2005

Are Ads in Blogs Successful?

Ads have been present in blogs for a while now, and larger companies are getting into the act. The New York Times reported that Budget Rent a Car ran a four-week promotional campaign by inserting ads in 177 targeted blogs. They spent $20k, received 19.9M impressions and 60k click-throughs to the Budget blog. That's a $0.33 CPC. Is that good? Does anyone else have any blog ad CPC data to compare?
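To compare this buy against other kinds of campaigns, the other standard ratios fall out of the same three figures reported in the article. A quick Python calculation:

```python
spend = 20_000           # dollars spent on the campaign
impressions = 19_900_000
clicks = 60_000          # click-throughs to the Budget blog

cpc = spend / clicks                # cost per click
ctr = clicks / impressions          # click-through rate
cpm = spend / impressions * 1000    # cost per thousand impressions

print(f"CPC ${cpc:.2f}, CTR {ctr:.2%}, CPM ${cpm:.2f}")
```

That prints `CPC $0.33, CTR 0.30%, CPM $1.01`: roughly a dollar per thousand impressions and a 0.3% click-through rate.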

An interesting quote from the article:

Even so, "the jury's still out on the metrics," Mr. Deaver said. "I'd be lying if I said I know what to measure to determine success."

This is a good opportunity for bloggers to better understand their audience, so they can get in on the advertising action. I'm not talking about Adsense-Overture advertising, where site content (and visitor preferences!) dictate which ads are shown. I'm more intrigued to see that marketing consultants are checking Technorati for information about a blog's relevancy to determine where to place ads. Know thy visitors!

On a slightly separate note, ABSOLUT has announced that their print, TV and outdoor advertising would be taking a "back seat to the Web". This is just one example of a very big shift...will we be seeing their ads in any of our favorite blogs? (They have a great website btw...worth checking out).

Filed in: advertising analytics blog

Monday, November 28, 2005

What Are They Missing at the Harvard Business School?

Ok...head on over to this ivy-covered article and then come on back. What are they missing? Wouldn't it be great if they were also helping current and future business leaders understand how important it is to measure success with their web presence? If someone is going to take the time to write openly and honestly about their expanding business, future directions, or their ups and downs, I'm sure they're going to want to know a little about their audience and what keeps them coming back (and where did they come from, and how often do they come back, and do they visit other properties, etc, etc.).

I believe our friends at UBC could help the folks in Cambridge augment their curriculum a bit. We wouldn't want the mighty Crimson falling behind the curve... (disclaimer: my wife is a big red alum)

Thanks Dustin!

Filed in: analytics

Thursday, November 17, 2005

Are Mars and Venus close enough?

Guy Creese notes that vendors often don't seem to understand their customers. He cites a couple of examples where training and support were extremely important factors in customer success (and vendor selection). No doubt. It is an excellent time to be a vendor right now, as long as you're paying close attention to your customers' needs and requirements (and innovating!).

Guy notes that, especially in a maturing market like web analytics, execution is critical to success. In a parallel industry, blogging tools, the market is less mature and the tools are far less complex, but customers are no more forgiving. As I noted previously, SixApart has had some growing pains recently with their Typepad service, and won praise for communicating well with their customers during the tough times.

This week, they went a step further to mend the fences. Typepad customers received an email from Barak Berkowitz, the CEO of SixApart. In the email, customers were given options as to how much of a credit they would like, based on how much of a disruption the customer felt the recent service issues caused them.

Good idea. Taking care of customers. Others thought so too. Now they need to execute!

Filed in: operations

Monday, November 14, 2005

Open Source is Quick(er than Google)!

Xavier Casanova notes that folks have already created a Wordpress plugin that will add the Google Analytics (GA) code to each blog page automatically. An excellent idea. Matt Labrum created the plugin, and it's really very straightforward...and probably very useful for Wordpress users...nice work.
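The core of a plugin like this is small: put the vendor's tracking code on every page the blog serves. WordPress plugins are written in PHP and hook into the theme rather than editing HTML strings, so treat this Python function as an illustration of the idea, not of Matt's plugin. Placing the code just before `</body>` is an assumption here (it's a common spot for tracking tags).

```python
import re


def add_tracking_snippet(html: str, snippet: str) -> str:
    """Insert a tracking snippet just before the last closing </body> tag.

    Appends the snippet if the page has no </body>, and leaves pages that
    already contain the snippet unchanged so it is never added twice.
    """
    if snippet in html:
        return html
    # Case-insensitive so </BODY> and "</body >" also match.
    closing = list(re.finditer(r"</body\s*>", html, flags=re.IGNORECASE))
    if not closing:
        return html + snippet
    pos = closing[-1].start()
    return html[:pos] + snippet + html[pos:]
```

Running every rendered page through a function like this is what makes the integration "automatic": nobody has to paste the code into each template by hand.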

Two things strike me as interesting about this. First, this plugin looks so easy to put together, and it was created so quickly for GA. Of course, many other integrations have been created between content management tools and web analytics vendors. But this tool will help Google gain faster adoption with the (growing) Wordpress crowd. I'm sure other Greasemonkey scripts and plugins will follow to help the blogging crowd better leverage GA. And Google doesn't even have to lift a finger! Wow.

The second thing that's interesting to me about this situation is that Google's Blogger tool (which I'm using for this site) isn't Google Analytics "aware". I was curious how Google was integrating analytics into Blogger, so I ran a quick search in the Blogger help before posting this article and got this:

Not surprising really...Google is a big company now. They have so many simultaneous projects going on, it must be a real chore to keep up with everything. I'm sure Blogger will be adding some nifty features soon. In the meantime, it's very cool to see others out there innovating tools quickly!

Tuesday, November 08, 2005

LiveJournal and Privacy Issues

KevinK posted a very interesting article that everyone in web analytics should read. It covers the deployment and subsequent removal of a web analytics tracking tool on the LiveJournal site.

Web analytics vendors walk a very fine line with regard to privacy. Third party tools collect almost any data that customers would like to forward to them. Customers can sometimes make mistakes and send information that their visitors would suggest is personally identifiable (as in this case with LiveJournal users).

Some important takeaways for me from the article:

"Since the [vendor] javascript is complex and hard-to-deconstruct, we cannot say unequivocally what else, if anything, the code also tries to collect."

Note 1: Vendors need to make sure their customers understand everything that is and/or can be collected with their javascript.

-------------------

"[Vendor] is legally bound to do nothing with the information other than report it back to us as anonymous and aggregate data. That's a strict legal commitment they've made to us and they make publicly via their privacy policy."

Note 2: Vendor privacy policies are extremely important and must accurately reflect exactly what they say they will do with the data they collect.

-------------------

"COPPA -- we completely respect the letter and spirit of COPPA (refresher). Again, we do not share personally identifiable information with [vendor] or enable [vendor] to collect it about any users, including those under 13. There are two types of under 13 users on LJ -- those whose parents have given us permission and those who have not and therefore can only view public pages but cannot use the application."

Note 3: This is serious business. Vendors must be very clear about privacy issues and regulations surrounding the data they collect. COPPA is just one example...there are many others.

-------------------

I'm impressed with LiveJournal's thoughtful response on this topic that has clearly upset some of their users. All site operators should make sure they review their privacy policy on a regular basis and match what they are saying with the data they are collecting.

Also, for sites that determine they collect sensitive data that seems inappropriate to send to a third party collection environment, they should investigate options to keep the data in-house.
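For sites that can't move collection in-house right away, one modest step is to scrub obviously identifying values out of page URLs before they're reported to a hosted collector. A minimal Python sketch; the parameter names are hypothetical, and each site would need to audit its own fields. It can't help with anything the vendor's JavaScript gathers on its own, which was exactly LiveJournal's concern.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters a site might treat as personally identifiable.
SENSITIVE_PARAMS = {"user", "username", "userid", "email"}


def scrub_url(url: str, sensitive: set[str] = SENSITIVE_PARAMS) -> str:
    """Drop sensitive query parameters before a URL is sent to a third-party collector."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in sensitive]
    # Kept parameters are re-encoded; an empty query drops the "?" entirely.
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

For example, `scrub_url("http://www.example.com/profile?user=alice&tab=friends")` returns `http://www.example.com/profile?tab=friends`.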

Monday, October 31, 2005

A Mashup: Mapping Visitors

I thought it might be interesting to see a map of where visitors to the LiquidSculpture site are located. I first started down the Google maps approach, but then I came across a very creative Excel macro that Jeffrey McManus created for Yahoo! Maps that was easy to modify and use (thanks Jeffrey!).

Data Gathering

The idea is relatively simple. Create a spreadsheet of geography data from your analysis tool, then run a macro against it to create the XML data to feed into Yahoo! Maps. So, let's start with gathering the geography data.

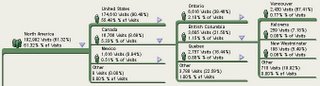

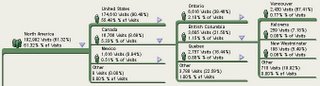

Using WebTrends, I ran a Geography Drilldown report for the month of October. You can see in this pie chart that ~61% of the visits to LiquidSculpture were from North America. The Yahoo! Maps data is limited to North America as far as I can tell, so we'll stick to that geography for now.

Drilldown Data

This drilldown shows further granularity into the data (North America -> Canada -> British Columbia -> Vancouver), showing that visitors from Vancouver, B.C., accounted for 0.77% of the overall visits during the month. Martin demonstrated his photography at a show in the San Juan Islands a year ago; perhaps his Vancouver visitors should get a special invitation next time he's up in that neck of the woods.

Get Out The Map

So, let's get some data on a map. Now that we have the data, we need to export it. After running the Geography Drilldown report in WebTrends, I exported the data to a CSV file (you could use the Excel export functionality too, but the CSV export works great in this example). I then modified Jeffrey's Excel template to select the cities out of the exported data to create the map below.

You can also view the map via this link, which pulls the XML data from a saved file.


Technical Notes

Yahoo! Maps is easy to use and very flexible. You create an XML file and feed it to their API. There are other tools available via the API that I'm not taking advantage of; perhaps if there's enough interest, I'll dig into it further.

If you use WebTrends, you should be able to use the same Excel template I used to map your own visit data on Yahoo! Maps. Please note that this is not a WebTrends product. It is not endorsed or supported by WebTrends. I'd be happy to help if you are having difficulty using it. Even if you don't use WebTrends, if your analytics package provides geography data that you'd like to map, you're welcome to use my template, or grab Jeffrey's original template and hack away.

My template includes a few changes to the original:


- I created "groups" that categorize the data so each group gets a different colored icon. I set the groups to greater than 1%, 0.05% to 1%, and less than 0.05%. Feel free to modify those values in the macro if you need to; I've highlighted the two areas that need to be changed with *** in the comments.

- I have modified the instruction box to note that you should sort the data by visits (and pageviews) before mapping it. The reason is that the WebTrends CSV export sorts the data by geography first. If you have a large dataset, you may miss other geographies unless you sort the data by visits first.

- The macro is looking for a few specific items that are in the WebTrends CSV exported file. If you're not using WebTrends, and are having difficulty making it work, try Jeffrey's original template.
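If you'd rather script the sort-then-group step than run it in Excel, here's a minimal Python sketch of the same idea. It is not Jeffrey's macro or my template: it assumes the export has been trimmed to hypothetical `city` and `visits` columns (the real WebTrends CSV has more fields), computes each city's share of total visits, sorts by visits, and assigns the three groups using the thresholds above.

```python
import csv
import io
from typing import Dict, List, Optional

# Thresholds mirroring the three icon groups, as percentages of total visits.
HIGH_PCT = 1.0   # more than 1% -> "high"
LOW_PCT = 0.05   # less than 0.05% -> "low"


def group_for(share_pct: float) -> str:
    """Map a city's share of total visits to one of three icon groups."""
    if share_pct > HIGH_PCT:
        return "high"
    if share_pct >= LOW_PCT:
        return "medium"
    return "low"


def group_cities(csv_text: str, total_visits: Optional[int] = None) -> List[Dict]:
    """Sort cities by visits (descending) and tag each with share and group.

    Assumes hypothetical 'city' and 'visits' columns. If total_visits is
    None, the sum of the rows is used as the denominator.
    """
    rows = [
        {"city": r["city"], "visits": int(r["visits"])}
        for r in csv.DictReader(io.StringIO(csv_text))
    ]
    total = total_visits if total_visits is not None else sum(r["visits"] for r in rows)
    if rows and total <= 0:
        raise ValueError("total visits must be positive")
    # Sort by visits first, so busy cities aren't buried in a geography-sorted export.
    rows.sort(key=lambda r: r["visits"], reverse=True)
    for r in rows:
        r["share_pct"] = 100.0 * r["visits"] / total
        r["group"] = group_for(r["share_pct"])
    return rows
```

Passing the site-wide visit total (rather than letting it default to the sum of the exported rows) keeps the percentages comparable to the drilldown report. For example, a city with 77 visits out of 10,000 total works out to 0.77% and lands in the middle group.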

Let me know if you have any feedback or questions on this.

Thursday, October 27, 2005

Managing an Operation

Running an internet-based service is exhausting, as I'm sure many of us in the web analytics world can attest. With careful planning and a watchful eye to the metrics of your environment, you can stay ahead of the game and avoid outages.

The folks over at TypePad have had some operational pains recently. According to their apology, they are moving systems between data centers (running out of space, power, etc.), and have seen failures in hardware that had never given them problems before. They mention storage equipment and network equipment among the recent failures. Ouch. All this on top of 10-20% growth in bandwidth each month (currently running at 250Mbps - impressive!). They are modifying and fixing the airplane while it is in flight!
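To put 10-20% monthly growth in perspective, a quick compound-growth calculation helps with capacity planning. This is a minimal sketch that assumes growth compounds at a constant monthly rate from the 250Mbps starting point; real traffic is rarely that steady.

```python
def project_bandwidth(start_mbps: float, monthly_growth: float, months: int) -> float:
    """Project bandwidth assuming constant, compounded monthly growth."""
    return start_mbps * (1.0 + monthly_growth) ** months


# Project one year out at the low and high ends of the quoted growth range.
for rate in (0.10, 0.20):
    after_year = project_bandwidth(250.0, rate, 12)
    print(f"{rate:.0%} per month -> {after_year:,.0f} Mbps after 12 months")
```

At 10% a month, bandwidth roughly triples in a year (about 785Mbps); at 20% it grows almost ninefold (about 2,229Mbps), doubling roughly every four months.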

Customers, observers and even Robert Scoble have commented on this issue. Most of the coverage has been very generous toward the Six Apart team, even though they were fielding many complaints about the performance of the service.

I've been a Movable Type customer for a couple of years. Six Apart develops terrific software, and they've migrated their software to a hosted service extremely well. I'm sure they're going to quickly overcome the issues they've faced, and the service will be stronger for it. It's very impressive how they continue to innovate, grow very quickly, and still communicate well with their customers.


Filed in: operations

Wednesday, October 26, 2005

Do I Have Your Attention?

I'm intrigued by AttentionTrust's efforts on storing clickstream data. It's very similar to the data we collect to provide web analytics reporting. It is a different frame of reference, though, in that they are putting control in the hands of each visitor.

The information collected would be extremely valuable to marketers. What might Amazon "suggest" to me, knowing that I read various Web Analytics and Search sites? Surely, topical books by Eric and John would magically appear as I browse the site. And that's without even logging in or previously visiting Amazon as far as they know (because, of course, I've deleted my cookies ;-)

According to the AttentionTrust site, there is still only one demo service provider collecting attention data. As they've noted, they have a chicken/egg problem: they need service providers and they need individuals to use the service.

So who gets it all started? Does it require a big player like Amazon to throw its weight behind the effort? Does the omnipresent Google get involved to add credibility, and balance doing no evil with collecting all information about everyone (of course...they already do this...without needing to share!)?

Or does the effort get a grassroots kick start? For example, could del.icio.us become a trusted service? Many individuals already trust del.icio.us to store some of their web history (made even easier to do with Flock). Wouldn't it be great to have them collect all of my clickstream data, allowing me to annotate (tag) particular pages of interest as I go (a browser plugin perhaps)? Then as I visit other sites, my del.icio.us username could be sent in the header of each request, allowing those sites to "see" my interests, for which I would be rewarded with generous discounts and offers - right?

Analytics products might be looking for new sources of information in this new world. There are still plenty of important metrics to be captured per web property, but there would be much deeper visitor data available to mine, provided we can solve a plethora of privacy issues first!

Stan James wrote the AttentionTrust ATX (Firefox plugin). He's also the author of Outfoxed, which is a very interesting mashup of social networks and web interaction. He's taken the concept of trust to a different level, allowing folks to define other individuals they trust, to allow them a richer experience when searching and browsing. Very cool stuff.


Wednesday, October 19, 2005

LiquidSculpture Analysis - BoingBoing Bounce

In the months and weeks leading up to the "event", those visitors who were lucky enough to find LiquidSculpture were among a select few internet travelers to come across this pioneering work.

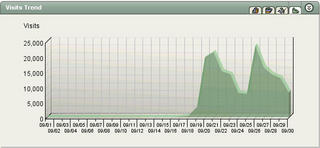

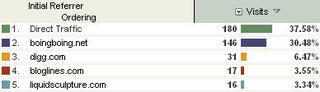

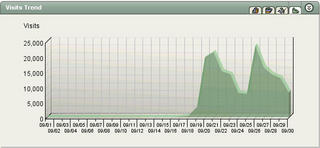

In August and through most of September, Martin's site averaged around 30 visits per day. On September 19, BoingBoing posted a link to the site and since then, things haven't quite been the same. Traffic spiked up to 20,000 visits on that day, and has bounced around quite a lot in these subsequent weeks. Note this graph showing the number of daily visits across the month of September...yes, there are visits for the first 18 days of the month...they are obviously dwarfed by the traffic late in the month. BoingBoing's post was clearly bringing visitors to the site.


What's extraordinary about the reach of sites like BoingBoing is how quickly posts spread through other bloggers. Many small sites picked up the post, as did some larger sites. As you can see from the visits graph above, traffic from BoingBoing tapered off after a day or two, once the post was no longer on its home page. But enough smaller blogs picked up the slack, and then some, over the next few days.

Location, location, location

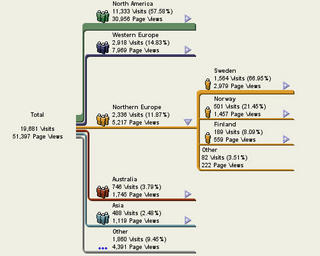

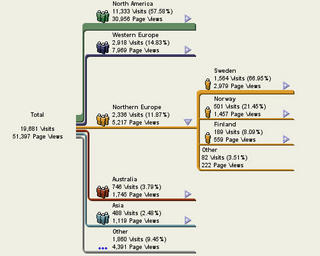

So, who are these visitors? Are blog-referred (blogerred?) visitors any different than other visitors? As BoingBoing is savvy enough to post their own stats, we know that the site is viewed by over 2M unique visitors per month. Unfortunately, their "countries" report is broken at the moment, so we can't determine the geographical distribution of their reach directly. But here's a geography drilldown report showing where the visitors to LiquidSculpture were coming from on September 20 with Western Europe expanded to show how many visits were from Sweden!


Referrers make all the difference

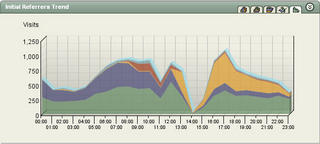

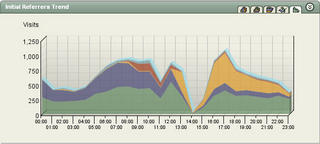

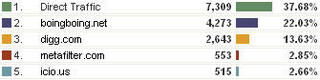

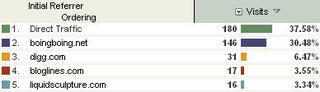

When analyzing blog/RSS data, one very important metric is referrers. Bloggers should keep a close eye on who is driving traffic to their site. The graph below is terrific as it shows a few things pretty clearly. 1) Make sure your ISP is ready for peak traffic! As you can see, there was a bit of a drop (ok...a complete drop) in traffic as Martin's ISP responded to the load of the day. 2) The referring traffic mix changed over the day as MetaFilter then Digg followed up on the BoingBoing post. 3) Many visitors followed up by posting tags to Del.icio.us, where it became one of the popular items for the day.

Another thing that jumps out when analyzing this data: Why are there so many "direct traffic" referrers? Are people actually typing the URL into their browser? Or getting an email and clicking on a link (from Outlook or some other non-browser-based email tool)? I believe it's something new that web analytics tools are only beginning to comprehend properly: these visitors are reading sites like BoingBoing through RSS/news readers. They click on the link from within their RSS reader, which opens a new browser window...thus no referrer. Note that you do get a referrer from visitors using web-based readers like Bloglines (Bloglines was the 8th most common referrer for September 20th, the highest of any feed aggregator. I sure wish we knew which readers folks were using!).
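Here's a minimal Python sketch of a referrer breakdown, assuming you've already pulled one referrer string per visit out of your logs (the sample values in the test are hypothetical). It groups referrers by host and files empty ones, including the "-" that common web server log formats write when no referrer was sent, under "(direct traffic)", which is where clicks from desktop RSS readers and email clients end up.

```python
from collections import Counter
from typing import List
from urllib.parse import urlparse

DIRECT = "(direct traffic)"
UNPARSED = "(unparsed)"


def referrer_host(referrer: str) -> str:
    """Return the referring host, or DIRECT when no referrer was sent."""
    if not referrer or referrer == "-":  # "-" = no referrer in many log formats
        return DIRECT
    host = urlparse(referrer).netloc.lower()
    if not host:
        return UNPARSED  # assumes full URLs; anything else is set aside
    # Count www.example.com and example.com as the same referrer.
    if host.startswith("www."):
        host = host[4:]
    return host


def tally_referrers(referrers: List[str]) -> Counter:
    """Count visits per referring host."""
    return Counter(referrer_host(r) for r in referrers)
```

Running this per hour instead of per day is what reveals the shifting mix (BoingBoing, then MetaFilter, then Digg) described above.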

Side Bar

Note that LiquidSculpture has not been picked up by Slashdot, but if it is, referrers will be much easier to measure. Slashdot does not provide links in their feeds, so readers who want more information about a referenced site need to go through Slashdot first. Smart marketing? Hmmmm. Regardless, BoingBoing cannot accurately measure its readership (they don't know whether I read an article or visit a link that they reference), nor can sites accurately know how much traffic BoingBoing has referred to them.

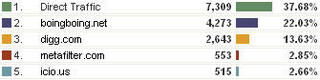

Money matters

Of course, Martin does sell his unique art, but he does not have any commerce mechanism directly on his site. Visitors to his "ordering" page can email him to place orders, or visit CafePress for a few items they distribute for him. I was curious whether BoingBoing readers were more or less likely to visit the ordering page, so I ran a report to break down visits to the ordering page by initial referrer to the site. As you can see, even though BoingBoing readers directly accounted for 22% of the visits to the site (from the stats above), they accounted for 30% of the visits to the ordering page. MetaFilter and Digg visitors were less likely to visit the ordering page, relative to their share of overall visits.
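That comparison boils down to a simple index: a referrer's share of ordering-page visits divided by its share of all visits. Above 1.0, visitors from that source were more likely than the average visitor to reach the ordering page; below 1.0, less likely. A minimal sketch using the BoingBoing figures above (the function name is my own, not a WebTrends report):

```python
def referrer_index(share_of_target_pct: float, share_of_all_pct: float) -> float:
    """Ratio of a source's share of target-page visits to its share of all visits.

    Equivalent to that source's rate of reaching the target page divided by
    the site-wide rate. Values above 1.0 mean the source over-indexes.
    """
    if share_of_all_pct <= 0:
        raise ValueError("share_of_all_pct must be positive")
    return share_of_target_pct / share_of_all_pct


# BoingBoing: 22% of site visits, 30% of ordering-page visits.
boingboing = referrer_index(30.0, 22.0)
print(f"BoingBoing index: {boingboing:.2f}")
```

For BoingBoing that works out to about 1.36, meaning its readers were roughly a third more likely than the average visitor to reach the ordering page.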

What about the browser war?

And the browser of choice for all of these fabulous new visitors? Let's just say it isn't IE - which only accounted for 33% of the visits on September 20. Firefox led the way with over 50% of the visits, and Safari came in 3rd at 10% of the visits. I've been pondering why the number of Firefox visits is so much higher than what BoingBoing sees, but their stats are showing hits and I'm using visits, so it's obviously not a perfect comparison. Also, I imagine that a lot of RSS readers send the agent string for IE + the reader name, so they probably get lumped into the IE stats.
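To see how a feed reader could get counted as IE, here's a naive user-agent bucketing sketch in Python. This is an assumption about how a simple log analyzer might classify agents, not how WebTrends or BoingBoing's stats package actually does it. Rule order matters: any agent string containing "MSIE" that no earlier rule claims lands in the IE bucket.

```python
def browser_family(user_agent: str) -> str:
    """Naively bucket a user-agent string into a browser family."""
    ua = user_agent or ""
    if "Firefox/" in ua:
        return "Firefox"
    if "Safari/" in ua:
        return "Safari"
    if "MSIE" in ua:
        # Any client that embeds "MSIE" (including desktop feed readers
        # that append their own name to an IE-style string) lands here.
        return "IE"
    return "Other"
```

A reader that sends something like "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SomeFeedReader 1.0)" would be counted as IE by this kind of rule, which could inflate IE's share slightly.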

Let me know if you have any comments or questions about this data. Many thanks to Martin for the wonderful art, access to some excellent data, and for putting up with my endless questions!


Filed in: liquidsculpture analysis

Wednesday, October 12, 2005

LiquidSculpture Analysis - AOL impact

Martin cornered me yesterday and said, "I've got three little letters for you...AOL". His LiquidSculpture site was featured on the front page of AOL. Very cool.

Of course, that meant another bump in traffic. What was the impact? Not as much as we had thought it might be. You can see from this hourly graph that there was definitely an uptick in visits when the page was featured on the site.


The traffic tapered off pretty quickly though, and although the link is still available on a second-level page of AOL, it obviously isn't generating as much interest as yesterday.

We're puzzled by one aspect of this traffic, though: the lack of referrers. We assume that AOL is stripping the referrer information out of the requests. They've been known to be creative with their customers' traffic, and of course, it is their network, so they can do whatever they want!

And how did LiquidSculpture end up on AOL's homepage? Martin has no idea. Someone obviously saw his site on BoingBoing or Fark or the NYTimes. I think we should start a competition though. We'll call it "MakeMeHotOrNot". We'll invite all of the portals and search engines to try to drive traffic to his site, and we'll measure it. Who do you think would drive the most?


Tuesday, October 11, 2005

How Prescient Art Can Be...

Andy Grove used to say (and perhaps he still does!) that only the paranoid survive. Google is helping us paranoid folks survive! I love this view of the future. Although, given Google's track record, I'd say it will be more like 2010 when all of these services should be available (beta starting next year of course!).

Thanks James!


Sunday, October 09, 2005

Blog Analytics Tool - MyBlogLog

MyBlogLog is a simple tool that provides bloggers with a very straightforward way to determine what external links their visitors are choosing.

Blogs and feeds have different requirements for analytics than most web properties. MyBlogLog helps answer an important question for bloggers: What external links are my visitors choosing? MyBlogLog provides their customers with some JavaScript that wraps an onclick event around external links.

Good idea. It probably needs to get wrapped into a larger web analytics offering to provide richer overall traffic data. But for a free tool that's so easy to deploy, this is a smart start for bloggers who would like to understand more about where their site is driving traffic.
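MyBlogLog does its work in the browser with JavaScript, but the core decision (which links count as "external") is easy to sketch. This Python version is an illustration of that classification, not MyBlogLog's code: it resolves relative links against the page URL, ignores non-HTTP schemes like mailto:, and treats a link as external when its host differs from the blog's host. The hostnames in the test are hypothetical.

```python
from typing import List
from urllib.parse import urljoin, urlparse


def is_external(href: str, page_url: str) -> bool:
    """Return True if href points to a different host than page_url."""
    target = urlparse(urljoin(page_url, href))  # resolve relative links first
    if target.scheme not in ("http", "https"):
        return False  # skip mailto:, javascript:, etc.
    # Strict host comparison: www.example.com and example.com count as different.
    return target.netloc.lower() != urlparse(page_url).netloc.lower()


def external_links(hrefs: List[str], page_url: str) -> List[str]:
    """Filter a page's hrefs down to the outbound links worth tracking."""
    return [h for h in hrefs if is_external(h, page_url)]
```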


Filed in: blog technology

Thursday, October 06, 2005

Paying (for) Attention

For a while now I've been watching a parallel analytics field getting started, and I believe we ought to be discussing the implications within the web analytics world. I'm talking about attention. It's based on a specification that the fine folks at Technorati started, with many others joining them in the definition process, but no analytics vendors as far as I can tell.

In short, the idea behind attentiontrust is that my analytics data (called attention data) is mine. Here are the "rights" as defined by attention.org:

Property: You own your attention and can store it wherever you wish. You have CONTROL.

Mobility: You can securely move your attention wherever you want whenever you want to. You have the ability to TRANSFER your attention.

Economy: You can pay attention to whomever you wish and receive value in return. Your attention has WORTH.

Transparency: You can see exactly how your attention is being used. You can DECIDE who you trust.

Expect to see some interesting work around this area. A local Portland company, Attensa, has released a slick RSS reader for Outlook, and is working on other tools to help folks manage their feeds and leverage the attention spec.

At Web2.0 this week, the attentiontrust folks released a Firefox recorder extension that sends your attention data to the attention service you choose. It seems clear that this is an early release meant to let people kick the tires a bit. The tool sends your "clickstream" data to a "trusted" service. The only service currently available is acmeattentionservice (who I believe should add a bit more to their website if they want to be perceived as trusted!).

I'll talk more about the underlying technology in a subsequent post. If you'd like to understand more about what the founders of attentiontrust are thinking, check out this very long but quite informative article.

Note too that Paul Miller and Michael Arrington over at TechCrunch have written about attentiontrust's efforts this week.

Filed in: attention

Wednesday, October 05, 2005

Amazing photography and case study

I'll run a few articles on a new case study that has quite literally dropped into our laps. We're going to keep tabs on an analytics event as it unfolds: a slashdot effect (although this one was kicked off by boingboing). The impact has been quite fantastic.

But first, let's talk about art. Martin Waugh is a colleague of mine (and a heck of a guy to boot) who has managed to develop several terrific photography jigs to take some truly wonderful photographs of drops. His work has recently been featured on boingboing, fark, yahoo picks (they did a very nice write-up), the nytimes, and of course, by our local WWeek.

Enjoy the great photography. I'll talk more about what's been happening to his traffic in the past few weeks since the boingboing bounce (a newly coined phrase!).

Check them all out on Martin's site (http://www.liquidsculpture.com).
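
A first look at a spike like the boingboing bounce usually comes straight from the web server's access log: count hits per day, and count which sites referred them. Below is a minimal Python sketch, assuming the log is in the Apache/NCSA "combined" format (which records the Referer header). The function names are illustrative and not taken from any particular analytics product.

```python
import re
from collections import Counter
from typing import Iterable
from urllib.parse import urlparse

# Combined log format: host ident user [time] "request" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def referring_hosts(lines: Iterable[str]) -> Counter:
    """Count hits by referring host; hits with no referer are grouped as '(direct)'."""
    counts: Counter = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue  # skip malformed lines or lines in another log format
        ref = m.group("referer")
        if ref in ("", "-"):
            counts["(direct)"] += 1
        else:
            counts[urlparse(ref).hostname or "(unknown)"] += 1
    return counts


def hits_per_day(lines: Iterable[str]) -> Counter:
    """Count hits per calendar day, using the 'dd/Mon/yyyy' part of the timestamp."""
    days: Counter = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if m:
            days[m.group("time").split(":", 1)[0]] += 1
    return days
```

Both functions return a `Counter`, so `.most_common(10)` on the result of `referring_hosts` lists the top referrers, and plotting `hits_per_day` makes the day the bounce started easy to spot.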

Email/blog etiquette

off topic...

Tim O'Reilly noted that he had received an email with the following note as part of the signature:

this email is: [ ] bloggable [x] ask first [ ] private

Apparently this signature has been described previously by Ross Mayfield. I've said similar things in previous emails, but hadn't thought of how to keep it simple like this. Good call.

Tuesday, October 04, 2005

Scaling a startup

Richard MacManus has noted that Bloglines is losing ground in the RSS world, saying that it is "already no longer the market darling amongst bloggers". I like the simplicity of Bloglines and use it almost daily to keep tabs (literally!) on the world.

Richard complains that Bloglines isn't adding new features fast enough. I agree that vendors need to keep their first-to-market edge. Bloglines has a large user base, and many of us are very devoted fans.

Mark Fletcher started Bloglines after already developing another excellent program, ONElist, which Yahoo bought (it is now well known to many people as Yahoo Groups). Mark responded directly to a post from Russell Beattie, saying that Bloglines has been "...working hard on the back-end of the system." Russell had also noted that Bloglines has been "...really suffering since it got bought by Ask Jeeves".

The issue at this moment for Bloglines seems to be one of scaling. This is what I'd like to talk about now.

Developing a web application is relatively easy these days, especially with the fantastic tools available and access to data à la web 2.0-style apps. Making the application scale requires a bit more work. Really making it scale requires a lot more work.

I don't know Mark, but I'm sure he knew this when he started. He had previously built, released and subsequently sold an amazing tool. He must have gone through the startup scaling lifecycle (I'll talk more about this in a subsequent post). Given what he knew, and given that he must have had access to at least a little bit of money to help get Bloglines off the ground, it's interesting to note that he still built Bloglines on the cheap.

In March of this year, Mark presented at eTech. I didn't attend the presentation, but I read through his materials and really enjoyed his approach. In his presentation materials he talked about his "garage philosophy" of startups. He talked about using "cheap technologies", and one note that I really liked was to "release early/release often". I believe a lot of folks have this same approach, and it serves them very well at the start.

What happens next though? Can a startup application scale quickly for rapid adoption as Bloglines has, then successfully transition to a truly scalable and stable architecture?

Again, I don't know Mark. But I do like that he's sold Bloglines to Ask Jeeves and he's still actively involved with the business - especially now that they have to do the less-than-glamorous job of trying to make the tool scalable and stable. I hope he/they succeed!

Monday, October 03, 2005

Dig it!

Dig is an old standby DNS utility. As internet technologies have matured, dig sees less use, but it's still very useful for finding out which name servers are responsible for a domain or IP and where names point on the internet.

I always forget what dig means, so of course, I consulted the DNS wiki, reminding me that dig is the "domain information groper". True name...I couldn't have made up anything better than that.

My favorite dig site is menandmice. Very simple, straightforward and a good one to bookmark. Use it to confirm DNS configurations or find out what's going on behind the scenes on a site. To see an example of a fairly confusing DNS configuration, look up yahoo: http://www.menandmice.com/cgi-bin/DoDig?host=&domain=www.yahoo.com&type=A&recur=on. You'll see that they CNAME (or alias) their www site to Akamai, which has DNS servers all over the world (providing speedy, redundant and (mostly) DoS-proof DNS).

Why is this interesting? DNS is a foundational component of the internet. Before a browser can send a web request, the hostname must first be resolved through DNS. In order to make web analytics data collection fast and reliable, everyone must have a reliable DNS infrastructure and really understand how it works. Dig is a great tool for taking a closer look.
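
At its core, dig sends a DNS question to a name server and prints the answer. Here is a minimal Python sketch of the question half, following the RFC 1035 wire format: a 12-byte header, then the hostname encoded as length-prefixed labels, then the record type and class. The function names are hypothetical, the resolver address is left to the caller, and only the reply's header (ID, flags, record counts) is decoded; parsing the answer records themselves, including CNAMEs like yahoo's alias to Akamai, is left out.

```python
import random
import socket
import struct
from typing import Dict, Optional


def encode_qname(hostname: str) -> bytes:
    """Encode a hostname as DNS labels: each label is length-prefixed; a zero byte ends the name."""
    out = bytearray()
    for label in hostname.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label: {label!r}")
        out.append(len(raw))
        out += raw
    out.append(0)
    return bytes(out)


def build_query(hostname: str, qtype: int = 1, query_id: Optional[int] = None) -> bytes:
    """Build a one-question query with recursion desired (qtype 1 = A, 5 = CNAME)."""
    if query_id is None:
        query_id = random.randint(0, 0xFFFF)
    flags = 0x0100  # RD bit: ask the server to recurse on our behalf
    header = struct.pack("!6H", query_id, flags, 1, 0, 0, 0)  # ID, flags, QD/AN/NS/AR counts
    question = encode_qname(hostname) + struct.pack("!2H", qtype, 1)  # QTYPE, QCLASS = IN
    return header + question


def parse_header(message: bytes) -> Dict[str, int]:
    """Decode the fixed 12-byte header of a DNS query or response."""
    qid, flags, qd, an, ns, ar = struct.unpack("!6H", message[:12])
    return {"id": qid, "is_response": flags >> 15, "rcode": flags & 0x000F,
            "qdcount": qd, "ancount": an, "nscount": ns, "arcount": ar}


def send_query(packet: bytes, server: str, timeout: float = 3.0) -> bytes:
    """Send the query over UDP port 53 to the given server and return the raw reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (server, 53))
        reply, _ = sock.recvfrom(512)  # classic DNS-over-UDP messages are capped at 512 bytes
    return reply
```

Against a live resolver, `parse_header(send_query(build_query("www.yahoo.com"), server=RESOLVER_IP))` (where `RESOLVER_IP` is a placeholder for whichever name server you want to ask) should come back with `is_response` set to 1 and, if the lookup succeeds, a nonzero `ancount`.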

Friday, September 30, 2005

NSA geo-location patent

According to news.com, the NSA has received a patent for identifying the physical location of a logical internet address (in short: we know where you are!).
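
The news.com summary doesn't say much about how the patented method works, so the sketch below is not it. For comparison, the approach most web analytics tools rely on is far simpler: a lookup table that maps blocks of IP addresses to places, searched with a binary search. A minimal Python sketch, assuming a hypothetical table of non-overlapping IPv4 blocks (the addresses are reserved documentation ranges and the locations are made up):

```python
import bisect
import ipaddress
from typing import List, Optional, Tuple


class IpLocationTable:
    """Hypothetical lookup table mapping non-overlapping IPv4 blocks to location labels."""

    def __init__(self, rows: List[Tuple[str, str]]):
        ranges = []
        for cidr, location in rows:
            net = ipaddress.ip_network(cidr)
            ranges.append((int(net.network_address), int(net.broadcast_address), location))
        ranges.sort()  # sorted by start address so we can binary-search
        self._starts = [start for start, _, _ in ranges]
        self._ranges = ranges

    def lookup(self, ip: str) -> Optional[str]:
        """Return the location for an address, or None if no block contains it."""
        addr = int(ipaddress.ip_address(ip))
        i = bisect.bisect_right(self._starts, addr) - 1  # last block starting at or before addr
        if i >= 0:
            start, end, location = self._ranges[i]
            if start <= addr <= end:
                return location
        return None
```

Commercial geolocation databases work on the same principle with millions of rows; the hard part is keeping the table accurate, not the lookup.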

The NSA only provides a little more information on their Network Geo-location Technology. Of course, if we really want to watch their 27-minute video, we'd probably need to arrange for a "personal visit" and fill out various forms, etc. Big brothers need big brothers too!

Thanks K!

Tuesday, September 27, 2005

fewer clicks, more clickthrough

DoubleClick posted some data that the NYTimes picked up yesterday, suggesting that fewer email recipients are opening or clicking on emails (spam filters or blocked tracking images may be contributors), but that those who do click are converting at a higher rate.

From the DoubleClick press release:

“We have seen open rates declining for several quarters and we knew that that it was in part as a result of image blocking by ISPs and Outlook. What this in-depth review shows is that it isn’t one individual factor that is putting pressure on open rates but a combination of forces, and with this insight we can help marketers to better analyze and target their e-mails to various list segments,” said Kevin Mabley, Vice President of Account Management and Strategic Services for DoubleClick E-mail Solutions.

My thoughts:

Another contributor might be that their tracking cookie (from DARTmail) is a third-party cookie, and more third-party cookies are getting blocked.

Also, anti-spyware tools might be identifying and blocking the DoubleClick tracking requests.
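
A quick worked example shows why image blocking can drag down reported opens without touching clicks. The sketch assumes (this is an assumption about the tracking setup, not something DoubleClick states) that opens are counted only when the tracking image loads, while clicks are counted through tracked redirect links. If some readers view the email with images off, their opens disappear from the report but their clicks still count, so the measured open rate falls while clicks per measured open rise. The numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    sent: int
    measured_opens: int  # opens recorded only when the tracking image loads
    clicks: int          # clicks recorded via tracked redirect links

    @property
    def open_rate(self) -> float:
        return self.measured_opens / self.sent

    @property
    def click_rate(self) -> float:
        return self.clicks / self.sent

    @property
    def click_to_open_rate(self) -> float:
        return self.clicks / self.measured_opens


def simulate_image_blocking(sent: int, true_opens: int, clicks: int, blocked_share: float) -> CampaignStats:
    """Hypothetical: a share of real opens never loads the image, so they go unmeasured."""
    measured = round(true_opens * (1 - blocked_share))
    return CampaignStats(sent, measured, clicks)


before = simulate_image_blocking(sent=10_000, true_opens=3_000, clicks=300, blocked_share=0.0)
after = simulate_image_blocking(sent=10_000, true_opens=3_000, clicks=300, blocked_share=0.4)
# before: 30% open rate, 10% click-to-open; after: 18% open rate, about 16.7% click-to-open
```

Nothing about reader behavior changed between the two runs; only the measurement did. That is worth keeping in mind before reading a falling open rate as lost interest.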

Monday, September 26, 2005

Blogging and disclaimer