Take a look at the list of exhibitors and sponsors of Syndicate, going on right now in lovely San Francisco. How many web analytics vendors do you see on the list? None? Any bets on what that list might look like at next year's conference?

There are many companies there that are building analytics solutions. Take a look at some of the approaches these folks are taking. Very creative ideas indeed.

Thursday, December 15, 2005

Tuesday, December 13, 2005

Web Analytics Data Changing User Behavior?

Many of us are familiar with providing web analytics data via an overlay. I believe Fireclick was first to this party with their Site Explorer tool...WebTrends has a product called SmartView...other vendors have their own versions as well. This rich analytics data has historically been viewed only by those using these analytics tools.

Whether or not you like the term Web 2.0, many of the new implementations of the web-as-a-platform are changing how we think of analytics. Ajax is changing how we think of tracking visitors, visits and views. APIs are opening up everywhere, providing a healthy exchange of data. Del.icio.us is my favorite example of a disruptive tool, as they change our paradigms of saving, sharing and searching.

So, how about web analytics? What's new and interesting and disruptive in our world? Here's one approach that I like. Mybloglog.com, previously noted here, looks to have an API that shows a lot of promise. I can't find any information on their site about it, but you can see an example of what they're doing at this cool Del.icio.us tool site (hover over the links in the article).

Wow. Are visitors more likely to click on the links that are rated higher, thus changing visitor behavior? Perhaps so. Amazon has done this for years. Others do as well, but I've not seen someone directly using web analytics data to do this before. Are there other examples out there?

Filed in: analytics technology

Monday, December 12, 2005

Web Analytics and Feeds #1: Feedburner

First in a series on analyzing feeds...

Feedburner is an excellent service for bloggers. They provide some terrific tools to easily expand the presence of a blog. They have methods to help publicize, optimize, monetize, analyze and yes, even troubleshootize (honest!) your feed.

Analysis Tools

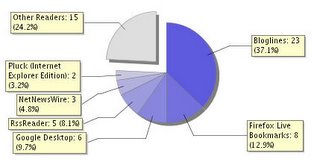

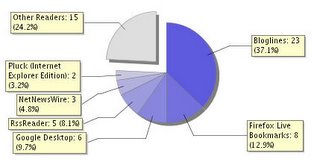

Their free analysis tools are fairly simple, but quite useful. Feed Circulation gives me an aggregate number of subscribers on a daily basis. Since they provide this stat through their Awareness API too, I have a widget on my desktop that gives me my updated number each day.

They also provide a further breakdown of my Readership, showing the number of subscribers by reader. Note: now that 23 of you subscribe via Bloglines, according to the nice folks over at Ask Jeeves...this is a blog that matters (although they've probably upped the requirement since October's Web 2.0 conference :-)

Collecting Feed Subscribers

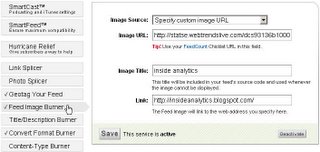

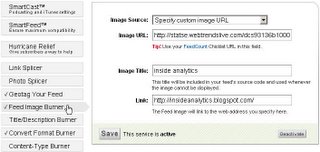

Getting this data from Feedburner is very handy, but I wanted to tie my Feedburner feed subscribers back to my other analysis tools. There are a couple of ways to do this, but the simplest method is to add an image request into Feedburner's "Feed Image Burner", as shown below.

This is normally used to provide an image to readers when displaying articles, but I hijacked this image location to add an analytics image request. Geek note: In RSS 2.0, this image is in channel->image->url; in Atom, I believe the atom:logo element would work the same way. In order to include requests for my feed in my web analytics data, I modified the "noscript" image request from my WebTrends code and inserted it into the "Image URL" field. The image request looks like this:

http://statse.webtrendslive.com/dcs93136b10000004rrmeb3jo_5u5i/njs.gif?dcsuri=/Feedburner.feed&WT.js=No&WT.ti=FeedBurnerFeed

Cool. This gives me a separate breakdown in my WebTrends reports showing requests to the URI "Feedburner.feed". Requests to the image are made when someone actually reads the feed, not when the feed reader merely picks up the feed. (Note: I'll figure out an equivalent URL for MeasureMap and Google Analytics, and post that in case anyone is interested.)
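For anyone who would rather script this than paste the URL by hand, here is a minimal sketch in Python. It rebuilds the image request above from its parts and wraps it in an RSS 2.0 channel image element. The helper names (`build_feed_pixel_url`, `rss_channel_image`) are made up for illustration, and the channel link is a placeholder, not the real blog address. Only the host, account path, and the three query parameters come from the URL above.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

# Host and account path copied from the image request shown in the post.
TRACKER_BASE = "http://statse.webtrendslive.com/dcs93136b10000004rrmeb3jo_5u5i/njs.gif"


def build_feed_pixel_url(uri, title, base=TRACKER_BASE):
    """Build the analytics image request (hypothetical helper).

    dcsuri is the URI the hit is reported under, WT.js=No marks the hit
    as a non-JavaScript request, and WT.ti is the page title.
    """
    params = [("dcsuri", uri), ("WT.js", "No"), ("WT.ti", title)]
    # safe="/" leaves the slash in dcsuri unescaped, as in the original URL.
    return base + "?" + urlencode(params, safe="/")


def rss_channel_image(pixel_url, title, link):
    """Return an RSS 2.0 <image> element (url, title, link) as a string.

    ElementTree escapes the ampersands in the URL, which XML requires.
    """
    image = ET.Element("image")
    ET.SubElement(image, "url").text = pixel_url
    ET.SubElement(image, "title").text = title
    ET.SubElement(image, "link").text = link
    return ET.tostring(image, encoding="unicode")


pixel = build_feed_pixel_url("/Feedburner.feed", "FeedBurnerFeed")
print(pixel)
# "http://example.com/" is a placeholder link (assumption).
print(rss_channel_image(pixel, "Inside Analytics", "http://example.com/"))
```

Changing the `uri` argument per feed would give each feed its own line in the reports, assuming the analytics tool groups hits by `dcsuri` as described above.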

Feedburner Issue

Back to Feedburner. I noticed an issue that I need to research further. I was originally using their "SmartFeed" service to translate the feed format to be compatible with the subscriber's application. It seemed to strip out the image, even though it showed it correctly inserted in the "XML Source" in the Feedburner UI. I disabled the SmartFeed service, and set the "Convert Format Burner" to RSS 2.0, and all worked great. I can now analyze when the feeds are viewed in a reader (for those readers that display an image, of course).

More complications

There's more to this story. I now have some visibility into (at least a partial list of) who is reading my feeds. But, are they visiting the site? More on this conversion in a subsequent post...

Filed in: analytics feedburner rss

Friday, December 09, 2005

Google Transit - WA Wednesday

Another great idea from the "20 percent time" policy Google offers its engineers. This one pulls together transit schedules and Google Maps in a very slick mashup.

They chose Portland for the first launch. Those of us who live in Portland know the Tri-Met transit system is excellent. Google puts it this way in their blog:

We chose to launch with the Portland metro area for a couple of reasons. Tri-Met, Portland's transit authority, is a technological leader in public transportation. The team at Tri-Met is a group of tremendously passionate people dedicated to serving their community. And Tri-Met has a wealth of data readily available that they were eager to share with us for this project. This combination of great people and great data made Tri-Met the ideal partner.

Fantastic stuff. Oh, and if you're downtown next Wednesday evening and need to get to the Lucky Labrador for the Web Analytics Wednesday chat, this link might help.

Or, if you're out near Intel in Hillsboro today and want to get downtown to see the national champion Portland Pilots women's soccer team celebration at noon, try this.

Tuesday, December 06, 2005

Kanoodle Has It Backwards

ClickZ reports that Kanoodle is going to pay publishers to distribute their "targeting" cookies. Kanoodle's BrightAds program says:

"Add our code to your page, and we'll drop cookies, so when a user visits another site in the Kanoodle network, we can show them ads that will appeal to them."

I'll give them an A for creativity, and for trying to amass an internet-visitor database as only Google can do right now (with Yahoo close behind).

However, this program is doomed for a couple of reasons. The most obvious reason is that third party cookies are dying a quick death. My prediction is that third party cookies will be useless by the end of 2006. Since this program relies on third party cookies, their data will be useless.

The second reason this effort will fail is that they (and others) won't be able to "own" visitor data...visitors will. AttentionTrust efforts will put preference data in the hands of visitors, which will fundamentally alter the advertising approach taken by these companies. 2006 will be an interesting time for Attention...

Monday, December 05, 2005

Privacy Policy for Inside Analytics

Your privacy is important to me. I do collect visitor information using a few different services, and experiment occasionally with various tools. However, I will always adhere to the following privacy standards:

Note 1: The information below is equivalent to the following compact P3P (which I cannot set in the HTTP header using Blogger, but I do include this information in a meta tag in my template):

NON DSP COR ADM DEV PSA OUR IND UNI COM NAV STA INT

NON: Unfortunately, visitors do not have access to view the information I collect. However I will attempt to share aggregated information about the traffic to this site on a regular basis.

DSP: There may be some disputes about my privacy practices. I don't know of any...but there may be some.

COR: If there are any disputes, I will work to correct them.

ADM: Information collected will help me better administer this site.

DEV: Information collected will help me develop this site.

PSA: Information collected will help me understand (pseudo-analysis) the habits and interests of the visitors to this site, but will not be tied back to any individually identified data.

OUR: The information is ours (mine), and I keep it. It is not now, nor will it ever be, given to any third party.

IND: I plan on keeping the information I collect indefinitely.

UNI: I use unique IDs to identify visitors. This helps me understand visitor preferences over time.

COM: I collect limited information about visitor computer systems, browsers, operating systems, screen resolutions, software, etc., that is provided by the browser or via JavaScript.

NAV: I collect navigation information, including pages visited, referrers and offsite links.

STA: I use cookies to maintain visitor identifiers and some limited state information.

INT: I collect search terms used to arrive at this site to better understand visitor traffic and navigation flow.
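As a rough illustration of how the token list above turns into something a server or template can emit, here is a small Python sketch. It assembles the compact policy string, a P3P response header, and a meta tag. The function names are hypothetical. The exact meta tag markup used in this blog's template is not shown in the post, so the form below is an assumption. The policy reference URL is the one given in Note 2.

```python
# Tokens copied from the compact policy in this post, in the same order.
COMPACT_TOKENS = [
    "NON", "DSP", "COR", "ADM", "DEV", "PSA", "OUR",
    "IND", "UNI", "COM", "NAV", "STA", "INT",
]


def compact_policy(tokens):
    """Join the tokens into the CP="..." form used for compact policies."""
    return 'CP="' + " ".join(tokens) + '"'


def p3p_header(tokens, policyref=None):
    """Build a P3P HTTP response header line (hypothetical helper).

    policyref optionally points at the full XML policy reference file.
    """
    parts = []
    if policyref:
        parts.append('policyref="' + policyref + '"')
    parts.append(compact_policy(tokens))
    return "P3P: " + ", ".join(parts)


def p3p_meta_tag(tokens):
    """Build a meta tag carrying the compact policy.

    Assumption: the post says the policy lives in a meta tag but does
    not show the markup, so this is only one plausible form.
    """
    return "<meta http-equiv=\"P3P\" content='" + compact_policy(tokens) + "'>"


print(p3p_header(COMPACT_TOKENS, "http://eblog.bugbee.org/w3c/p3p.xml"))
print(p3p_meta_tag(COMPACT_TOKENS))
```

Keeping the tokens in one list means the header and the meta tag cannot drift apart if the policy changes.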

Note 2: My XML Privacy Report is available at this location: http://eblog.bugbee.org/w3c/p3p.xml. If you visit my "support" site with IE, you can see this file in IE's Privacy Report.

Please email me (elbpdx at gmail.com) if you have any questions about this post or this policy.

Filed in: privacy

Thursday, December 01, 2005

Measuring Visit Duration in Hours

It is definitely possible to go overboard with visitor tracking. I visited one site last week that sent a tracking hit every second! (I wish I had saved the URL! Update: It's an applet on Information Week...check out this article on Google Analytics as an example...they're using an On24 Flash applet to deliver News TV...the On24 applet collects data through Limelight...as irony would have it, On24 uses Google Analytics on their site.) What could they possibly do with that information? I guess they believe they will know each visit's duration more precisely. But once you start accounting for all of the data anomalies generated by visitors who keep their browser open long after the actual visit has ended, the data is no longer precise at all.

There are some examples of when visit duration tracking could be very useful (and much more accurate), especially when bundled with a feature. As I type this article, Blogger's auto-save feature is sending a "status" hit every minute or so, which has the dual purpose of saving my draft as I type, and giving Blogger some pretty accurate information about how long folks take to write (and edit!) articles. (Geek sidebar: they use a GET request with the draft text stored in cookies...I wonder what happens when the article is larger than 4k bytes?).
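To make the duration problem concrete, here is a rough Python sketch of how hit timestamps might be turned into visit durations. It assumes hits are only sent while the visitor is actually doing something (as with an auto-save), and it splits visits on a 30-minute gap, a cutoff commonly used in web analytics rather than anything Blogger or On24 is known to use. The function names are made up for illustration.

```python
from datetime import datetime, timedelta

# Assumed inactivity cutoff; a common convention, not a vendor setting.
DEFAULT_TIMEOUT = timedelta(minutes=30)


def split_visits(hit_times, timeout=DEFAULT_TIMEOUT):
    """Group hit timestamps into visits.

    A new visit starts whenever the gap since the previous hit is
    longer than the timeout.
    """
    visits = []
    for t in sorted(hit_times):
        if visits and t - visits[-1][-1] <= timeout:
            visits[-1].append(t)
        else:
            visits.append([t])
    return visits


def visit_durations(hit_times, timeout=DEFAULT_TIMEOUT):
    """Return one duration (last hit minus first hit) per visit."""
    return [v[-1] - v[0] for v in split_visits(hit_times, timeout)]


# Three hits in the morning, then two more after the browser sat idle.
hits = [
    datetime(2005, 12, 1, 9, 0),
    datetime(2005, 12, 1, 9, 1),
    datetime(2005, 12, 1, 9, 2),
    datetime(2005, 12, 1, 11, 0),
    datetime(2005, 12, 1, 11, 1),
]

print("naive duration:", max(hits) - min(hits))
print("per-visit durations:", visit_durations(hits))
```

Without the cutoff, the idle stretch in the middle is counted as part of one long visit, which is how a few minutes of activity ends up being measured in hours.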

Gmail uses a similar approach. Use the CustomizeGoogle Firefox extension with Google Suggest selected, and every letter you type in the search bar is sent to Google. Wow.

Here's a fun use of technology, brought to you by the advertising geniuses at Virgin Digital. Warning: If you like rock music, trivia, and have a few minutes to spare, be prepared to actually spend a few hours on this, and of course...they're watching how long you spend on the game (we've only found 62 of 74!).
